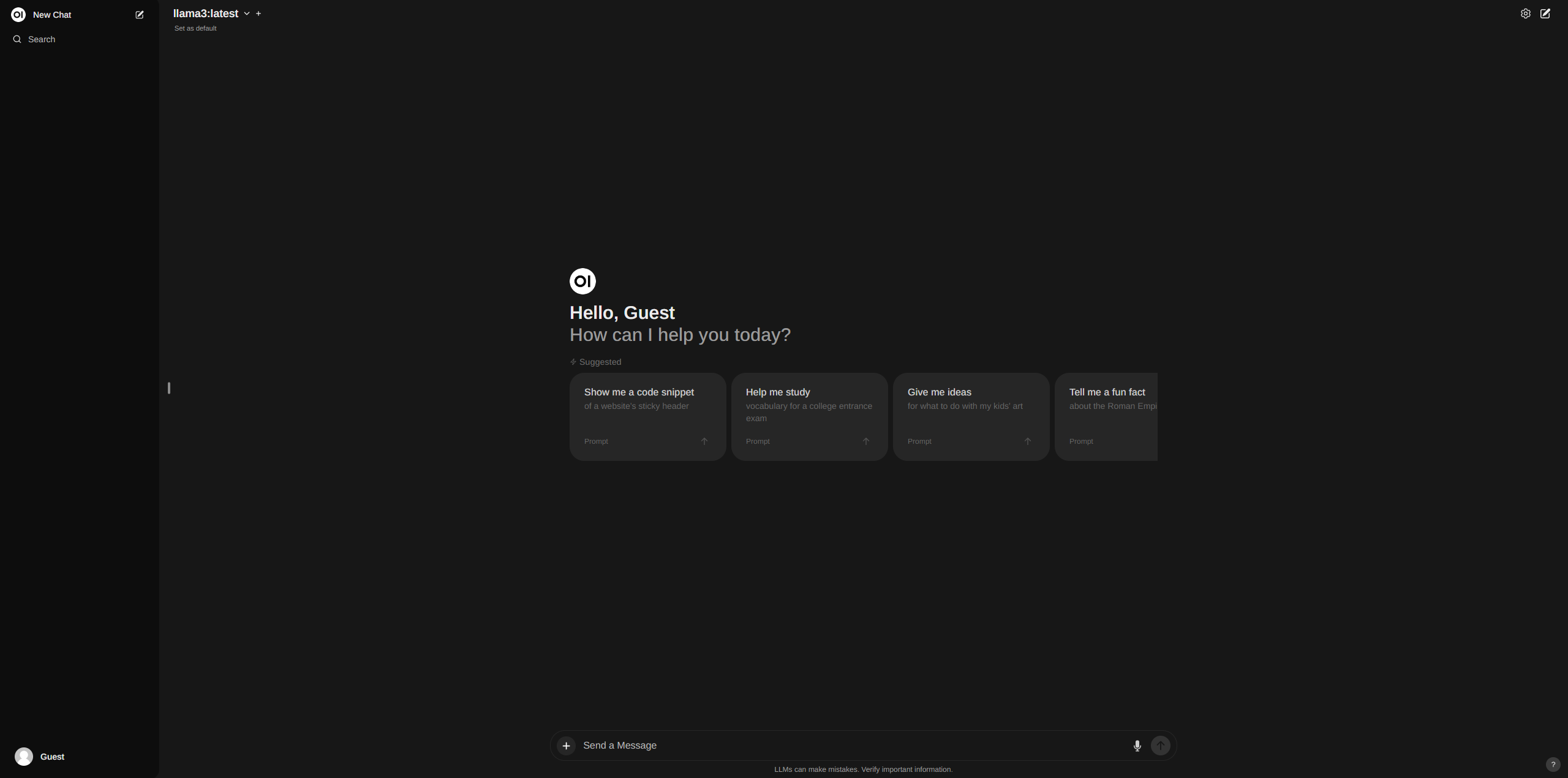

Ollama with Open WebUI

I built a self-hosted large language model (LLM) setup that makes multiple AI models, running on my own hardware, reachable from the internet through a user-friendly web interface. This project showcases my skills in cloud technologies, containerization, and AI model deployment.

Key components:

- Utilized Ollama on my personal computer to host the language models, including llama3, mistral 7b, and others.

- Deployed Open WebUI using Docker, enabling a seamless integration of multiple LLM models within a single interface.

- Configured AWS Route 53 to route external traffic to an NGINX reverse proxy running on my home server.

- Set up NGINX to forward the traffic to my personal computer, which hosts the Ollama backend and the Open WebUI front-end.
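To illustrate how a front-end such as Open WebUI can talk to the Ollama backend, here is a minimal Python sketch against Ollama's HTTP API. It is not the code used in this project. It assumes Ollama is listening on its default address (`http://localhost:11434`) and that a model named `llama3` has already been pulled. The helper names `build_generate_request`, `model_names`, and `generate` are hypothetical and exist only for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from a parsed /api/tags response body."""
    return [m["name"] for m in tags_response.get("models", [])]


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send the prompt to Ollama and return the generated text."""
    req = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Requires a running Ollama instance with the (assumed) llama3 model.
    print(generate("llama3", "Say hello in one sentence."))
```

Switching models only means changing the `model` field in the request, which is roughly what lets a single interface offer several models side by side.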

This project demonstrates my ability to:

- Host and manage AI language models in a local environment

- Utilize containerization technologies like Docker for efficient deployment

- Configure cloud services (AWS Route 53) for external access

- Implement reverse proxy solutions (NGINX) for secure and controlled traffic routing

- Integrate multiple AI models into a user-friendly web interface

Through this project, I gained hands-on experience deploying and managing AI models and strengthened my skills in cloud technologies, networking, and system integration. It reflects my passion for exploring innovative solutions and my ability to bridge the gap between AI and cloud computing.

You can explore the working demo of this project at: https://chat.vanislim.com/

Project information

- Category: AI/Web App

- Project date: Apr 2024

- Project URL: https://chat.vanislim.com/

- Tools: Route 53, Docker, NGINX, Ollama, Open WebUI

- Technique: AI, Network, Containerization